Friday, September 24, 2010

Bird Mortality in the Alberta Oilsands

Ground Water Depletion

“All the water that will ever be is, right now.” (National Geographic 1993)

As the years pass, the consumption of water on our planet has increased dramatically. Humans across the globe have been pumping out groundwater faster than ever imagined; as a result, that water ends its cycle in the oceans. This increase in water consumption has undermined our capacity for sustainable development. Population growth and changes in lifestyle are a few of the many factors that have resulted in billions of gallons of water being wasted.

In this post, the primary source, titled "Groundwater depletion rate accelerating worldwide" and found in an issue of Geophysical Research Letters, is compared to a brief secondary source from an online journal titled "Groundwater depletion rate said doubled". The primary source begins by explaining the impact groundwater depletion has on rising sea levels. Similarly, the secondary source uses facts and numbers to gain the audience's interest. The primary source explains why the sea level is actually rising (in this case, through evaporation and precipitation of the extracted water). It goes on to break down the potential consequences of misusing what used to be an untapped resource. The article emphasises that the water being drawn accounts for 25% of the annual sea level rise across the planet. The study goes on to compare estimates of groundwater added by rain with the amounts being removed for agriculture and other uses. This was done by compiling a database of global groundwater information, including maps of groundwater regions and water demand. Since the total amount of the world's groundwater is not known, it is very hard to estimate how fast the global supply will run out, but according to scientists, if water were drained as rapidly from the Great Lakes, they would go bone-dry in around 80 years (Marc Bierkens 2010).

The secondary source, on a similar note, gets the reader’s attention by going straight to the facts. The article repeatedly quotes the parts of the primary source thought to be important. One example is right in the opening paragraph, where the writer states, “Findings published in the journal Geophysical Research Letters say water is rapidly being pulled from fast-shrinking subterranean reservoirs essential to daily life and agriculture in many regions... The rate at which global groundwater stocks are shrinking has more than doubled between 1960 and 2000.” The article gives a brief summary of the actual content and adds emotional appeal to the topic by underlining major points and interesting statistics.

On the other hand, the major differences between the two sources are quite obvious. The two sources convey the same message, but in very different manners. As stated above, the secondary source sets out to keep the attention of the reader. This kind of writing is often aimed at a general audience, whereas the primary source comes forward in a more scientific manner, laying out the evidence and discussing cause and effect. As stated in the primary article, groundwater makes up about 30 percent of the available fresh water on the planet, with surface water accounting for only one percent. The rest of the potable, irrigation-friendly supply is locked up in glaciers or the polar ice caps. This means that any reduction in the availability of groundwater could have undesirable effects for a growing human population. The research also goes into possible solutions to the problem, all of which were left out of the secondary article. This kind of paraphrasing often leaves behind important details or even accentuates the less important points. This is the most prevalent flaw of language: every time something is translated or rewritten, it almost always loses part, if not most, of its meaning. On a larger scale, these kinds of differences can have more serious consequences than the short-sighted goal of holding onto the reader’s attention. It is important to take advantage of such observations, to appreciate the importance of proper scientific method, and to apply these lessons in future writing.

To conclude, both sources voice their concern for a particular cause, in this case the depletion of groundwater. They have their similarities and differences; the most evident is that the secondary source is a derivative of the primary source. It gives the reader a summary of the original material, which makes it a preferable alternative for someone who is not so passionate about the matter.

References

Primary:

"Groundwater Depletion Rate Accelerating Worldwide." PhysOrg.com - Science News, Technology, Physics, Nanotechnology, Space Science, Earth Science, Medicine. Web. 23 Sept. 2010.

Secondary :

"Groundwater Depletion Rate Said Doubled - UPI.com." Top News, Latest Headlines, World News & U.S News - Upi.com. Web. 23 Sept. 2010.

Why Hyenas Are Exclusively for Africa?

In the secondary source, the author describes the role of climate change as a crucial one in the extinction of hyenas from Europe. However, he cites the same information twice in the paragraph. The same number of words could have been used to supply other relevant details, such as the one I pointed out earlier. The author also makes a blunder by contradicting himself. Earlier in the article, he describes the climate of southern Europe as too extreme for hyenas to survive; later in the article, he declares that southern Europe always had a climate within hyenas' tolerance range. This may well confuse the reader and cast doubt on the authenticity of the article. The primary source, by contrast, states that southern Europe was always within hyenas' tolerance range.

References -

1- http://www.sciencedirect.com/science?_ob=ArticleURL&_udi=B6VBC-504CNMB-2&_user=10&_coverDate=08/31/2010&_rdoc=1&_fmt=high&_orig=search&_origin=search&_sort=d&_docanchor=&view=c&_acct=C000050221&_version=1&_urlVersion=0&_userid=10&md5=68878ddffaa9652e1282c4391520c4f7&searchtype=a

2-http://www.sciencedaily.com/releases/2010/09/100923105759.htm

3-http://journals2.scholarsportal.info.subzero.lib.uoguelph.ca/tmp/1758652581086860762.pdf

Thursday, September 23, 2010

A team of researchers led by U. Pöschl and S.T. Martin has just released its scientific report on rainforest aerosols and the air quality of the Amazon. The field experiment was called the Amazon Aerosol Characterization Experiment 2008 (AMAZE-08). The team’s intent was to find and study air that was as uncontaminated as possible, with little or no pollution from human interference. The scientists conducted the study in 2008 near Manaus, Brazil, during the wet season, which runs from February to March. The wet season was chosen because it approximates pre-industrial conditions, meaning it avoids most pollution emissions from surrounding regions. The team discovered that the aerosols that act as the rainforest’s cloud condensation nuclei were mostly composed of secondary organic material, which results from the oxidation of emissions from the rich foliage found in the Amazon’s forests. These data differ greatly from what air composition was previously assumed to be, mainly because most air samples studied in earlier research contained some level of pollutants, no matter how little was thought to be present. In this blog I will be comparing the information presented by my primary source (the research team) and my secondary source (the media).

It is evident, very early on, that there are many differences between a primary and a secondary source. One of the most prominent differences is the credibility of the secondary source. The author of the secondary source often makes concrete claims that lack appropriate evidence to support them. This is displayed when the author states that the new information on the composition of the air particles will undoubtedly transform the way atmospheric changes are viewed and analyzed around the world. However, there is no substantial evidence that these findings will cause so great a transformation in the global scientific community. Another claim that the secondary source makes without support is that the research will help scientists predict how deforestation affects life on our planet (Heimbuch 2010). This idea was never mentioned or supported by the primary scientific journal article; in fact, it is merely an assumption the author made based on the primary source, and therefore not an established fact.

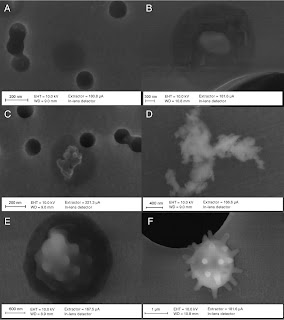

Fig. 1. Sample of particle types obtained by Amazon Aerosol Characterization Experiment 2008 (U. Pöschl et al. 2010).

The credibility of the secondary source is also affected by the lack of measurements and data presented about the findings of the experiment. For example, the actual measurement techniques and equipment used by the scientists were never mentioned in the secondary source, nor did the author mention what the aerosol droplets were composed of. In the primary source, by contrast, all of the findings, equipment, data, measurements and techniques were discussed. For this reason, the secondary source article was not as in-depth and informative as the original paper.

Although the secondary source lacked many examples and measurable data, it fulfilled its purpose as a secondary source because it was directed at a different audience than the primary source. The primary source is a scientific journal article intended for a specific, scientifically literate audience, as it relies heavily on scientific terminology that the average person may have difficulty understanding. Thus, the secondary source is useful because it breaks down the dense information and presents it in layman's terms so the average person can understand the study. The style of writing, including the formality of the two articles, therefore varies greatly due to the different target audiences. The secondary source also tends to make many assumptions and dramatizes much of the information without evidence to support it. This is clearly evident in the title of the secondary source, which states that the research team is the first to successfully bottle the last pure air particles on Earth (Heimbuch 2010). Yet the original scientific report never affirmed that such a statement is true, and it does not concretely claim to be the first study done on the subject.

Another difference between the primary and secondary sources is the tone in which the material is presented. Not only does the language vary, but the underlying tone is distinct. The information presented in the primary source is factual rather than opinion-based, because it consists predominantly of measurements taken and data collected. This contrasts with the secondary source, which tries to persuade the reader that the findings are not merely important but monumental for developing new insights into our environment (Heimbuch 2010).

Fig. 2. The Amazonian Water and Aerosol cycle (U. Pöschl et al. 2010).

Pollution, specifically air pollution, has been a very controversial and important topic in the past few years, especially with the rising issue of climate change. The Earth’s climate and atmospheric system are strongly affected by the aerosols and particles the atmosphere contains. Scientists are interested in finding out definitively what impact humans have had on air quality since the Industrial Revolution. In order to better predict climate change in the future, it is important not only to understand the anthropogenic impacts, but also to gain a better understanding of the history of climate change. This knowledge is conducive to more accurate predictions of what is to come. The more informed people are, the better the decisions they will make, which will consequently affect the environment.

References:

1) Heimbuch, Jaymi. "Last Pure Air Particles on Earth Captured for Climate Science." TreeHugger. 22 Sept. 2010. Web. 24 Sept. 2010. <http://www.treehugger.com/files/2010/09/last-pure-air-particles-on-earth-captured-for-climate-science.php>.

2) U. Pöschl, S. T. Martin, B. Sinha, Q. Chen, S. S. Gunthe, J. A. Huffman, S. Borrmann, D. K. Farmer, R. M. Garland, G. Helas, J. L. Jimenez, S. M. King, A. Manzi, E. Mikhailov, T. Pauliquevis, M. D. Petters, A. J. Prenni, P. Roldin, D. Rose, J. Schneider, H. Su, S. R. Zorn, P. Artaxo, M. O. Andreae. "Science/AAAS | Science Magazine: Sign In." Science/AAAS | Scientific Research, News and Career Information. 17 Sept. 2010. Web. 24 Sept. 2010.

Ozone Layer Fully Regenerated by 2050?

As I was searching for a secondary article on which to base my assignment, I happened to stumble upon a short and simple article stating that, according to a UN report, the ozone layer could be regenerated by 2050. The title of this article is “Ozone layer is recovering, says UN”, and it supports the idea that the ozone layer is currently being regenerated. The article is roughly a page and a half long and references its primary source, the UN report, quite a lot. The original piece is titled “Scientific Assessment of Ozone Depletion: 2010” and is approximately 34 pages. Just the fact that this article could summarize in less than 2 pages what the UN report published in at least 34 was quite intriguing to me.

In the past two decades, the Montreal Protocol has been working to better the environment. Although this may seem obvious, it has done so by working towards phasing out harmful substances, many of which also act as greenhouse gases. This information all came from the original report, which covers several specific gases that the Protocol has been working to phase out. The original report goes into very elaborate detail about the progress the Protocol has made, and in my opinion, the secondary source, a two-page article, does not do the work justice.

The idea that the secondary article presents is that the breakthrough by the Montreal Protocol has been noticed since the beginning of 2010, but on reading the full report, it is clear that this process has been under way for more than two decades. The secondary source does not take into account all the research presented in the primary source, and by leaving this information out, it fails to address questions that the public might have. The article states that the regeneration of the ozone layer is being caused by the phase-out of almost 100 substances used in day-to-day products. Because the length of time this effort has been at work was not properly represented, many people reading the article might worry about the replacement substances now being used and whether they may cause new and more dangerous problems. The fact of the matter is that the original substances that had been destroying the ozone layer in the first place have been steadily declining for decades now. Although this particular aspect of the original report was not taken into account, the article did mention that the published report has been reviewed by around 300 scientists. What those reviewers had to say about it, however, was not mentioned.

Personally, I feel as though the secondary source does not appreciate the work that has gone into restoring the ozone layer by phasing out HCFCs, CFCs, halons, etc., which are most of the harmful substances affecting it. What the treaty has accomplished is much more than just a simple change from the results of the Kyoto Protocol; observations have also been made about climate change. In the primary report, UV radiation, climate change, and ozone layer regeneration are all touched upon, whereas the secondary source mainly focuses on the regeneration of the ozone layer. The secondary source took what it needed from the primary source, weakly summarized some of the information given by the original piece, and only briefly mentioned the other topics, making them sound less important. For example, the original report presents the fact that there has been no significant change in UV irradiance levels since the late 1990s, and that observations of the ozone column have also been consistent since then. The secondary source does not mention any of this.

As for climate change, the secondary source only vaguely explained what the primary source foresees. The primary source clearly presents, in much detail, that “The global middle and upper stratosphere are expected to cool in the coming century” (pg 28), due to the increase in CO2, whereas the secondary source says, “Changes in climate are expected to have an increasing influence on stratospheric ozone in the coming decades, it says”. The secondary source does not go into any further detail as to how or why there will be an influence on the stratospheric ozone; it merely states that there will be a change... sure, but what kind?

All in all, I truly do not believe that the secondary source included enough information as to how or why there are going to be any changes in the ozone layer, and what the consequences and/or repercussions will be. Boldly stating such a strong argument without backing it up can create a lot of doubt and worry amongst the public, which can in turn cause controversy, cost the article and its publisher their credibility, and possibly even create doubt in the primary source altogether.

By Eliza Solis Maart 0712280

Secondary: http://www.tgdaily.com/sustainability-features/51619-ozone-layer-is-recovering-says-un

Primary: http://www.unep.org/PDF/PressReleases/898_ExecutiveSummary_EMB.pdf

Playing with Fire in Order to Save the Eucalyptus Trees

(Image: Melanie Stetson Freeman/Getty)

The article states that without regular low-level fires, the eucalyptus tree cannot survive, because these are the conditions it has needed to survive in the past. It then goes on to explain that without regular fires, dry-rainforest-type species are able to grow beneath the canopy of the eucalyptus trees. These species compete with the eucalyptus trees for water and contribute to a thick layer of litter that changes the chemistry of the soil, which stresses the trees even further (Zukerman, 2010). There is a severe lack of evidence to support these statements. For example, there is no mention of any evidence that would explain why or how a thick layer of litter could change the chemistry of the soil. The article only tells the reader that it does. In the paper (primary source), there are specific examples of experiments done by other scientists indicating that “seedlings planted in plots that had been experimentally burnt by Withers and Ashton (1977) had survived, indicating that recruitment was possible where the thick litter layer was eliminated” (Close et al. 2010). The paper continues to explain that because of the thick layer of litter, “eucalypts have less access to P and/or cations as these elements become locked up in soil, litter and midstorey biomass.” (Close et al. 2010). This evidence supports the statements made by the authors of the paper and increases the strength of their claims.

Also included in the paper was a table that illustrated the increased density of fire tolerant species and the decreased density of eucalypt species in Temperate Australia in the absence of fire.

The paper gave a wide variety of references to support the claims it was trying to make. The list of references to other research backing up the paper's hypothesis is staggering, which made the claim much stronger. However, the article did not quite reach the standard set by the primary source it was based on. The article did reference the paper written by Close et al., as well as two more sources to support information that was not found in the primary source, such as research from 2004 stating that eucalyptus trees need neutral to acidic soil in order to take up nutrients such as iron and manganese (Zukerman, 2010). Although the secondary source did not have nearly as many references to support its findings as the primary source did, the article did cover all its bases by referencing each paper it extracted information from.

Both the paper and the article spoke of a similar situation involving the North American pine. However, the primary source goes into much more depth by describing the situation in North America as well as giving references to further information on the research. The parallels between the situation in Australia and that of North America are clearly stated in the primary source. An example of this is “the increased development of midstorey vegetation resulting in the decline of fire tolerant tree species and the dominance of shade tolerant species and altered soil microclimate and microbial dynamics” (Close et al. 2010). The secondary source does not mention any of the reasons that the two situations are similar, only that they are similar. This lack of evidence does not help to strengthen the claims made in the article.

The limitations of the primary source are clearly stated in the introduction of the paper as well as in the conclusion. As stated in the paper by Close et al., “although anecdotal reports from forest and fire management authorities, coupled with our own observations, indicate that overstorey eucalypt decline is widespread in some forest types across temperate Australia, we currently lack quantitative information on the extent of decline and there is a clear need to investigate both the scale of the phenomenon and the ecological processes that underpin it. Emphasise is made on the fact that the research reported is site and species specific and that the model is thus applicable to only a sub-set of Australian ecosystems. For example some eucalypt species growing in relatively fertile soils and under high rainfall do not appear to exhibit premature decline with the development of a dense, shade adapted midstorey.” (Close et al. 2010). As you can see, all the limitations of the paper are clearly stated, leaving no room for misunderstanding when someone reads it. However, the article does not discuss or even mention any limitations of the work.

It is clear that there is a price to pay when moving from primary source to secondary source. Information supporting the main points is lost in order to create a shorter version of the original source. Media has to make the information more public-friendly, that is, to target a general audience and make the information easy to understand. In a primary source, the information is targeted at a more specific group of individuals, allowing all of it to be included. A secondary source can lead the reader to make assumptions about the information given, because the limitations of the work are left out, and the strength of the claims is clearly compromised. However, a secondary source is a good place to learn a little about a subject of interest and can intrigue the reader to learn more. If you want to understand the subject fully and get all the correct facts, a primary source is the only way to do so.

References:

Close, D., Davidson, N., Johnson, D., Abrams, M., Hart, S., Lunt, I., Archibald, R., Horton, B. and Adams, M. (2009, April 3). Premature Decline of Eucalyptus and Altered Ecosystem Processes in the Absence of Fire in Some Australian Forests.

Retrieved from http://www.springerlink.com/content/v05482100m4j622u/fulltext.html

Zukerman, W. (2010, September 16). Receding gums: What ails Australia's iconic trees?

Retrieved from http://www.newscientist.com/article/mg20727772.200-receding-gums-what-ails-australias-iconic-trees.html?full=true

Ozone Recovery

Adam Gibson

0705733

ENVS 1020

Recently, I found a secondary source article on TG Daily called “Ozone Layer is recovering, says UN”, which refers to a recent United Nations report, a peer-reviewed primary source called “Scientific Assessment of Ozone Depletion: 2010.” While the article got all of its facts correct, there were several differences between the two.

TG Daily

One aspect that both the primary and secondary sources focused on was The Montreal Protocol on Substances that Deplete the Ozone Layer; the difference was how much each source said about it. In brief, The Montreal Protocol was adopted in 1987 to prevent harmful ozone-depleting substances (ODSs) from reaching the ozone layer. It did so by placing bans on numerous compounds found in common household items such as refrigerators and aerosols, which had been widely used up until that time and, as a result, were depleting the ozone layer. The secondary source only goes so far as to say that nearly 100 of these substances were banned, while the primary source goes into much detail regarding how successful the bans have been.

According to the secondary source, it appears that every substance banned under The Montreal Protocol has almost completely disappeared from the atmosphere. However, it is here that the primary source shows an alternate view. While many ODSs have dispersed exactly as predicted, there are still many more that take a very long time to disperse to an appreciable extent. In other words, it will take many more years before these ODSs are present in the atmosphere only to a negligible extent. There is also another very important point to remember: even though production of these ODSs has stopped, there are still stockpiles of some ODSs in the form of old fridges, foams and other such products, especially in East Asia. It is because of this that the decline in tropospheric chlorine was only approximately 66% as fast as predicted.

In addition to this, the report discusses numerous compounds whose concentrations had shown no real change up until a couple of years ago. However, as less and less of these ODSs are released into the atmosphere, their concentrations are now beginning to decrease.

According to both sources, the Montreal Protocol is helping to control the levels of carbon dioxide and other greenhouse gases through its control over these ODSs. In fact, it is believed that, thanks to the Montreal Protocol, greenhouse gas emissions equivalent to more than 10 gigatonnes of carbon dioxide per year have been avoided. This value was highlighted in both the primary and secondary sources not only for its size, but because this reduction is five times larger than the target of the Kyoto Protocol, which was designed specifically to deal with the reduction of greenhouse gases.

However, the primary source also contained some less positive information, about increasing concentrations of some of these substances, that wasn’t mentioned at all in the secondary article. Hydrochlorofluorocarbons (HCFCs) are increasing at a rate of 4.3% per year, which is faster than what has been observed in previous years.

In conclusion, the TG Daily article did a good job of presenting the basic arguments found in the primary source. While its results were not nearly as complete or as scientifically grounded as they could have been, the article did provide a direct link to the report for anyone interested in reading about the subject in more detail.

References:

· PRIMARY SOURCE: WMO/UNEP “Scientific Assessment of Ozone Depletion: 2010.” Scientific Assessment Panel of the Montreal Protocol on Substances That Deplete the Ozone Layer. September 16, 2010

http://www.unep.org/PDF/PressReleases/898_ExecutiveSummary_EMB.pdf

· SECONDARY SOURCE (and picture) Ozone Layer is Recovering, says UN. Emma Woolacott (TG Daily). September 20, 2010

http://www.tgdaily.com/sustainability-features/51619-ozone-layer-is-recovering-says-un

Bird Mortality at Tailings Ponds

Magazine articles and media reports are written to catch the audience’s attention. In this blog I will compare and contrast a primary source study and a secondary source news article. The article I located was called “Birds Dying in Oilsands at 30 Times the Rate Reported, Says Study”, written by Bob Weber for the Toronto Star. The article discussed the difference between the bird mortality rates reported before a study was done and those found once the study was completed. The study was “Annual Bird Mortality in the Bitumen Tailings Ponds in Northeastern Alberta, Canada” by Kevin P. Timoney and Robert A. Ronconi. The primary and secondary sources of this information had many differences as well as similarities.

Some of the differences I noticed in the secondary article include quotations that were very short compared to the full sentences in the primary source, and much information that was left out, including numbers and some important procedural details. For example, there is one quotation where I believe the whole quotation would have been more effective for the reader's understanding of the material. “It’s basically ad hoc” (Toronto Star 2010) is not located in the text word for word. I feel that the quotation in the primary source, “The ad hoc monitoring by industry, sanctioned by government, is inconsistent, cannot answer these questions, and undoubtedly underestimates actual mortality.” (Timoney and Ronconi 2010), gives a better understanding of the material. This is a large difference, and putting in the original quote would have been more effective in showing how the government needs to take responsibility on this issue. Another difference between the primary and secondary sources is the amount of information. I did expect a lack of information, because newspaper articles are supposed to be brief and to the point to keep the reader's attention. Although I agree with this method of sparking people’s interest, I understood the problem of bird mortality much better after reading the primary source. Much of the full information, including numbers and experimental procedures, was left out. For example, the only numbers included in the article were the annual average before the study of 65 deaths per year and the average after the study of 1973 deaths per year. The study, however, was more specific, saying that 43 different species of birds died over the years in tailings ponds, in addition to giving the average numbers of deaths. Also, I was upset to see that the fact that millions of birds migrate through the northeastern Alberta area each year was not mentioned. Comparing this to how many deaths there actually were is an important part of the study, but it was not included in the newspaper article. Finally, there was also some procedural information that would seem important to a study that the newspaper article did not include. An example of this is that Timoney looked at three separate ponds, whereas the article just says “ponds” (Toronto Star 2010). Also, I feel it would have been important to include that 1.4 of the water was tailings, or that birds dying before the spring and fall migrations were not included in the study. There seem to be a lot of differences, but let’s look at the similarities as well.
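As a rough check on the two figures quoted from the newspaper article, the short sketch below divides the post-study average by the pre-study average. The variable names are my own and are only illustrative; the two numbers are the ones quoted above, and nothing else from the study is assumed.

```python
# Annual averages quoted in the newspaper article (Toronto Star 2010).
# Variable names are illustrative, not taken from either source.
deaths_per_year_before_study = 65
deaths_per_year_after_study = 1973

# How many times larger is the study's estimate than the earlier figure?
ratio = deaths_per_year_after_study / deaths_per_year_before_study

print(f"The study's estimate is about {ratio:.1f} times the earlier figure")
```

The result comes out at roughly 30, which is consistent with the “30 Times” in the headline of the article.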

The similarities between the two articles include information about sources of inaccuracy and the fact that the writers seem to share the same opinion. I was pleased to see that the newspaper article included the uncertainties in mortality estimation. The article notes that no observations of birds were made at night and that birds that sank into the tailings ponds were not counted because they cannot be seen. It was also mentioned in both that landing in the tailings can have a larger effect on endangered species, and that some birds that leave contaminated can carry the contamination back to their habitat and affect others. I was also very impressed to see that the paper did not overstate these claims to make it seem like the study was very accurate. Rather, these limitations support the idea that the mortality rate of birds is much higher than can be measured. This makes the reader more concerned and thus more interested in the article. Another large similarity I found was that both Bob Weber and Timoney stated the facts and did not directly give opinions about how the government won’t take responsibility. From both of their writing, though, it was easy to see that they both felt the government was not taking the responsibility it should for bird mortality rates, and that something more needed to be done to monitor and reduce them.

In conclusion, we see that a primary source study and a secondary source article are very different from one another, but they are based on the same ideas. It’s my opinion that to understand the situation you must read the primary source, if nothing else.

References:

Kevin P. Timoney and Robert A. Ronconi (2010). Annual Bird Mortality in The Bitumen Tailings Ponds in Northeastern Alberta, Canada. The Wilson Journal of Ornithology

Bob Weber (2010). Birds Dying in Oilsands at 30 Times the Rate Reported, Says Study. The Toronto Star

Pictures:

http://www.thestar.com/news/sciencetech/environment/article/857638--birds-dying-in-oilsands-at-30-times-the-rate-reported-says-study

http://www.google.ca/imgres?imgurl=http://www.theepochtimes.com/n2/images/stories/large/2010/03/03/ducks2.jpg&imgrefurl=http://www.theepochtimes.com/n2/content/view/30744/&usg=__fRdhBUFbQctSKIST9EsPCtjL1UY=&h=501&w=750&sz=68&hl=en&start=0&sig2=_cOPLCAhMCMlkLkhCIAXVw&zoom=1&tbnid=8r5NExlh6ohNRM:&tbnh=125&tbnw=174&ei=oRqcTOqLCoP58AbIzs36DA&prev=/images%3Fq%3Dducks%2Btailings%26um%3D1%26hl%3Den%26biw%3D1291%26bih%3D540%26tbs%3Disch:1&um=1&itbs=1&iact=hc&vpx=481&vpy=76&dur=42&hovh=183&hovw=275&tx=175&ty=106&oei=oRqcTOqLCoP58AbIzs36DA&esq=1&page=1&ndsp=18&ved=1t:429,r:2,s:0

Oil Remains Deep Beneath The Gulf Of Mexico

Just as the BP oil spill in the Gulf of Mexico seemed to be finally coming to an end, a group of American scientists found a 35 km long, clear plume of oil deep in the ocean, 1100 m below the surface. A large amount of γ-proteobacteria was found in the hydrocarbon plume; these bacteria are closely related to known petroleum degraders. The scientists also found a faster than normal rate of biodegradation of the hydrocarbons, even at 5 degrees Celsius. They found the half-life of the oil to be 1.2 – 6.1 days. They believe that it may be possible to use micro-organisms to remove the oil contaminants, even with the lack of oxygen that deep under the sea (Hazen, Dubinsky, et al. 2010). The problem is that, since the oil plume has no colour, no one really knows if there are more plumes like this one in the Gulf.

Josh Wingrove, a writer for the Globe and Mail, then wrote his article based on Hazen and Dubinsky’s findings. This article is considered a secondary source to Hazen and Dubinsky’s original journal article. In his article, Wingrove talks about the fact that the U.S. government had been telling the public that the environmental issues concerning the BP oil spill were under control, but Hazen and Dubinsky’s findings say otherwise. About 6.8 million litres of chemical dispersants had been used to break up the oil, which made it break apart and sink to the bottom. The plume was found and measured in June, and it was clear and odourless. During their study, Hazen and Dubinsky found petroleum degraders in the oil plume. Luckily the plume was much deeper than where most fish live, but many other types of sea life will be affected. At the end of the article Wingrove states, “All [of the research] suggests a grim reality – the oil that leaked into the Gulf of Mexico won’t soon be gone” (Wingrove 2010). The problem is that there is still oil out in the Gulf of Mexico, it will take years to fully degrade, and we are still not aware of the impacts it could have on the environment.

As you can see from this information, the primary source is a journal article written by the original scientists who carried out the experiment or study. All of their ideas are based on their own data, which were recorded at the time of their study. A secondary source is an article that has been written based on a primary study. In this case, the secondary source was written by Josh Wingrove, a writer for the Globe and Mail. He took information from Hazen and Dubinsky and put it in words the public can understand. The primary source is written in scientific language that the average person without a science background would not understand.

In this case, both authors were trying to convey the same message. The only difference was that Wingrove added a lot more political information. He mentioned the U.S. government several times throughout the article, even though Hazen and Dubinsky did not mention it once. Hazen and Dubinsky were just stating their scientific research and nothing more. Since Wingrove was writing strictly for the public, he added more information and statistics that they would be interested in, instead of just pure observation and analysis. In any case, the primary source is generally the most reliable, because it comes straight from the researchers themselves. You have to be careful when taking information from a secondary source because anyone could have written it and added some of their own information, whether it is true or false. Overall, I think that it is beneficial to read both a primary and a secondary source when researching information, because the secondary source gives you a brief understanding of the topic, while the primary source gives you an in-depth analysis with data to back it up.

References

Hazen, Terry C., Dubinsky, Eric A. et al. (2010, August 2). Deep-Sea Oil Plume Enriches Indigenous Oil-Degrading Bacteria. Science Magazine. doi:10.1126/science.1195979.

Primary Source:

www.sciencemag.org/cgi/rapidpdf/science.1195979v2.pdf

Wingrove, Josh. (2010, August 20). Oil Remains Deep Beneath The Gulf Of Mexico, Study Shows. Globe and Mail.

Secondary Source:

http://www.theglobeandmail.com/news/world/americas/oil-remains-deep-beneath-the-gulf-of-mexico-study-shows/article1679281/